
Is Artificial Intelligence Antifragile?

The iatrogenic potential of human intervention in artificial intelligence

Posted September 17, 2018

We are in the midst of the Artificial Intelligence Revolution (AIR), the next major epoch in the history of technological innovation. Artificial intelligence (AI) is gaining momentum globally, not only in scientific research but also in business, finance, consumer products, art, healthcare, esports, pop culture, and geopolitics. As AI becomes increasingly pervasive, it is important to examine at a macro level whether AI gains from disorder. “Antifragile” is a term and concept put forth by Nassim Nicholas Taleb, a former quantitative trader and self-proclaimed “flâneur” turned author of the New York Times bestseller “The Black Swan: The Impact of the Highly Improbable.” In “Antifragile: Things That Gain From Disorder,” Taleb describes antifragility as the “exact opposite of fragile,” something “beyond resilience or robustness.” According to Taleb, antifragile things not only “gain from chaos” but also “need it in order to survive and flourish.” Is AI antifragile? The answer may not be as intuitive as it seems.

The recent advances in AI are largely due to improvements in pattern recognition made possible by deep learning, a subset of machine learning, which is a method of AI in which systems learn from data rather than being explicitly programmed with rules. The learning is achieved by feeding data sets through two or more layers of nonlinear processing. The greater the volume of data and the faster it can be processed, the faster the computer learns.
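To make “layers of nonlinear processing” concrete, here is a minimal sketch in plain Python of a two-layer network (a hidden layer and an output layer, each followed by a sigmoid nonlinearity) trained by gradient descent. The class name `TwoLayerNet`, the sigmoid activations, the mean squared error loss, the learning rate, and the toy OR data set are all illustrative assumptions rather than anything the article prescribes; real deep learning systems use far larger networks and optimized tensor libraries running on GPUs.

```python
import math
import random


def sigmoid(z):
    """Logistic nonlinearity squashing any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


class TwoLayerNet:
    """Inputs -> hidden layer (sigmoid) -> single output (sigmoid)."""

    def __init__(self, n_inputs, n_hidden, seed=0):
        rng = random.Random(seed)
        # Layer 1: one row of input weights per hidden unit, plus a bias per unit.
        self.w1 = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        # Layer 2: one weight per hidden unit, plus a single output bias.
        self.w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        # Layer 1: weighted sum of the inputs, then the nonlinearity.
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        # Layer 2: weighted sum of the hidden activations, then the nonlinearity.
        y = sigmoid(sum(w * hj for w, hj in zip(self.w2, h)) + self.b2)
        return h, y

    def loss(self, data):
        """Mean squared error over (input, target) pairs."""
        return sum((self.forward(x)[1] - t) ** 2 for x, t in data) / len(data)

    def train_step(self, data, lr=0.5):
        """One full-batch gradient descent step on the mean squared error."""
        n = len(data)
        g_w1 = [[0.0] * len(row) for row in self.w1]
        g_b1 = [0.0] * len(self.b1)
        g_w2 = [0.0] * len(self.w2)
        g_b2 = 0.0
        for x, target in data:
            h, y = self.forward(x)
            # Gradient of the loss w.r.t. the output unit's weighted sum.
            d_out = 2.0 * (y - target) * y * (1.0 - y) / n
            g_b2 += d_out
            for j, hj in enumerate(h):
                g_w2[j] += d_out * hj
                # Backpropagate through hidden unit j (uses weights before the update).
                d_hid = d_out * self.w2[j] * hj * (1.0 - hj)
                g_b1[j] += d_hid
                for i, xi in enumerate(x):
                    g_w1[j][i] += d_hid * xi
        # Apply all accumulated gradients at once.
        self.b2 -= lr * g_b2
        for j in range(len(self.w2)):
            self.w2[j] -= lr * g_w2[j]
            self.b1[j] -= lr * g_b1[j]
            for i in range(len(self.w1[j])):
                self.w1[j][i] -= lr * g_w1[j][i]


# Toy data set (an assumption for the demo): the logical OR of two bits.
data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
net = TwoLayerNet(n_inputs=2, n_hidden=3, seed=0)
before = net.loss(data)
for _ in range(2000):
    net.train_step(data)
after = net.loss(data)
print(f"loss before training: {before:.4f}, after: {after:.4f}")
```

Each call to `train_step` feeds every example through both layers and adjusts the weights to reduce the error, which is the “learning” described above.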

Faster processing is achieved mostly through the parallel processing capabilities of graphics processing units (GPUs), versus the comparatively serial processing of central processing units (CPUs). Interestingly, computer gaming has helped accelerate advances in deep learning and therefore also plays a role in the current AI boom. GPUs, originally used mostly to render graphics for computer games, are now an integral part of deep learning architecture. To illustrate, imagine three ice-cream carts, each with a line of customers, and only one scooper. In serial processing, the lone scooper aims to finish serving all of the carts at the same time and does so by bouncing between them, scooping out a few cones at one cart before moving on to the next. In parallel processing, there are multiple scoopers instead of just one, so a savvy customer can divide an order among the carts and have it filled simultaneously, getting faster results.
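The ice-cream analogy can be turned into a toy timing model. The sketch below assumes, purely for illustration, that every cone takes 10 seconds to scoop and that the lone serial scooper serves two cones per visit to a cart; the function names are hypothetical. Round-robin serving makes the carts finish at roughly the same time, but the total time is still the sum of all the work, whereas one scooper per cart finishes in the time of the longest single line.

```python
def serial_round_robin(orders, cones_per_visit=2, seconds_per_cone=10):
    """One scooper cycles between carts, serving a few cones at each before moving on.

    Returns the time (in seconds) at which each cart's line is fully served.
    """
    remaining = list(orders)
    finish = [0] * len(orders)
    clock = 0
    while any(remaining):
        for cart in range(len(remaining)):
            if remaining[cart] == 0:
                continue
            served = min(cones_per_visit, remaining[cart])
            clock += served * seconds_per_cone
            remaining[cart] -= served
            if remaining[cart] == 0:
                finish[cart] = clock
    return finish


def parallel(orders, seconds_per_cone=10):
    """One scooper per cart, all working at the same time."""
    return [cones * seconds_per_cone for cones in orders]


orders = [6, 6, 6]  # cones waiting at each of the three carts
print("serial finish times:  ", serial_round_robin(orders))  # [140, 160, 180]
print("parallel finish times:", parallel(orders))            # [60, 60, 60]
```

Splitting one customer's order of nine cones three ways, `parallel([3, 3, 3])`, likewise finishes in 30 seconds instead of the 90 seconds a single scooper would need. GPUs gain their advantage in the same way, on workloads that divide into many independent pieces.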

Deep learning gains from a high volume and wide assortment of data. Access to large and diverse data sets, complete with outliers, is imperative for minimizing biased or low-quality output. Drinking from the proverbial firehose of data is not a stressor for deep learning but rather a desired scenario. Machine learning thrives on big data and brings order to the chaos of information. In short, AI deep learning gains from data diversity.
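A toy illustration of why coverage matters: the sketch below fits a one-dimensional decision threshold as the midpoint between the largest negative and the smallest positive training example. The hidden rule (`x >= 5`), the sample values, and the fitting method are assumptions chosen for the example. Trained on values spanning the whole range, the threshold matches the rule; trained on a skewed sample with a gap in the middle, it lands in the wrong place and misclassifies exactly the points the sample never covered.

```python
def learn_threshold(samples):
    """Fit a 1-D decision threshold: the midpoint between the largest negative
    example and the smallest positive example seen during training."""
    negatives = [x for x, label in samples if not label]
    positives = [x for x, label in samples if label]
    return (max(negatives) + min(positives)) / 2


def true_label(x):
    # The hidden rule the model is trying to learn (an assumption for this demo).
    return x >= 5


def errors(threshold, test_points):
    """Count test points where the learned threshold disagrees with the true rule."""
    return sum((x >= threshold) != true_label(x) for x in test_points)


diverse = [(x, true_label(x)) for x in range(11)]        # 0..10, covers the whole range
narrow = [(x, true_label(x)) for x in [0, 1, 2, 3, 10]]  # skewed: nothing between 3 and 10
test_points = list(range(11))

t_diverse = learn_threshold(diverse)  # (4 + 5) / 2 = 4.5
t_narrow = learn_threshold(narrow)    # (3 + 10) / 2 = 6.5
print(f"diverse sample: threshold {t_diverse}, errors {errors(t_diverse, test_points)}")  # 0 errors
print(f"narrow sample:  threshold {t_narrow}, errors {errors(t_narrow, test_points)}")    # 2 errors
```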

The caveat to deep learning’s inherent antifragility is the risk of accidental adverse outcomes caused by the iatrogenic effects of faulty human management. In “Antifragile,” Taleb uses the term “iatrogenic” in place of the phrase “harmful unintended side effects” resulting from “naïve interventionism.” Although AI is a machine-based system, it is ultimately created and managed by humans. Human intervention in AI deep learning data sets and algorithms could have many unintended consequences. Putting moldy bread in the world’s best-performing toaster still results in suboptimal toast.

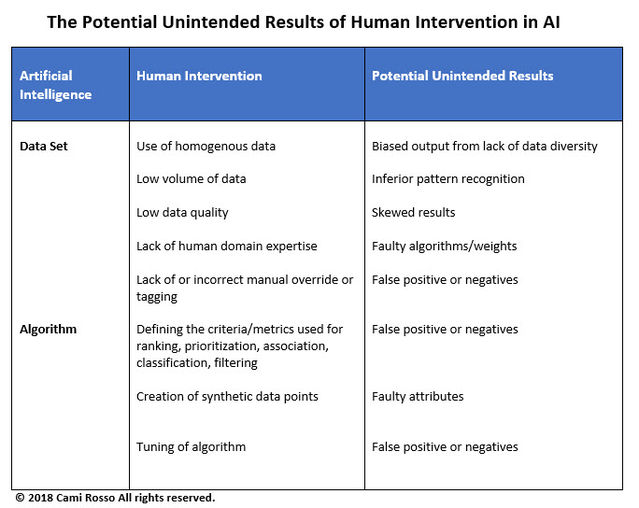

The classic computer science adage “Garbage In, Garbage Out” (GIGO) applies to deep learning. Human operators largely decide the size, source(s), selection, timing, frequency, and tagging of data sets, as well as any manual overrides to the AI system. Human programmers who create the AI algorithms define the criteria and metrics used for system ranking, prioritization, association, classification, and filtering. When notable data points are missing, programmers may intervene by creating synthetic data points. Human programmers also make decisions on tuning algorithms. The potential iatrogenic effects of human intervention may include biased output, inferior pattern recognition, skewed results, faulty algorithms, inappropriate weights, inaccurate attributes, and false positives or false negatives. Put fresh bread in the toaster but set the heating level too high or too low, and the result may be burnt or undercooked toast. So although AI deep learning is antifragile, it is the human factor that tends to be fragile.
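The toaster's heat setting has a direct analogue in the learning rate, one of the knobs human programmers tune. The sketch below runs plain gradient descent on the one-variable function f(w) = (w - 3)^2, chosen as an assumption because its behavior is easy to verify: each step multiplies the remaining error by (1 - 2 × learning rate). A setting that is too low leaves the model “undercooked” after 50 steps, a moderate one converges, and one that is too high overshoots further on every step and diverges. The specific learning rates are illustrative values.

```python
def gradient_descent(lr, steps=50, start=0.0, target=3.0):
    """Minimize f(w) = (w - target)**2 with plain gradient descent.

    Returns the remaining error |w - target| after the given number of steps.
    """
    w = start
    for _ in range(steps):
        grad = 2.0 * (w - target)  # derivative of (w - target)**2
        w -= lr * grad
    return abs(w - target)


for lr in (0.01, 0.1, 1.1):  # too low, moderate, too high (illustrative values)
    print(f"learning rate {lr}: remaining error {gradient_descent(lr):.3g}")
```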

This raises the question of whether artificial intelligence could replace human intervention at these points, creating a self-regulating AI system. In theory, this is possible: AI can be created to produce and manage other AI programs. For example, individual specialized AI programs could focus on tasks such as selecting data for training sets, flagging data outliers, predicting false positives or negatives, suggesting synthetic data points for algorithms, and many other functions. Envision a main AI system that manages a network of specialized AI programs. During processing, the overarching AI activates the appropriate specialist AI to perform each task that once required human intervention.
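The coordinator-and-specialists arrangement can be sketched in a few lines. Everything here is hypothetical: the `Coordinator` class, the task names, and both specialists are illustrative stand-ins, and simple fixed rules take the place of the trained models a real system would use. One specialist suggests synthetic values for missing data points (here, simply the median of the observed values). The other flags outliers using the median absolute deviation (MAD) rule with the commonly used 3.5 cutoff on the modified z-score.

```python
import statistics
from typing import Callable, Dict, List, Optional


def suggest_synthetic_points(values: List[Optional[float]]) -> List[float]:
    """Specialist: fill missing entries (None) with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = statistics.median(observed)
    return [fill if v is None else v for v in values]


def flag_outliers(values: List[float], cutoff: float = 3.5) -> List[float]:
    """Specialist: flag values whose modified z-score, 0.6745 * |v - median| / MAD,
    exceeds the cutoff. Flagged values are reported, not deleted."""
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        return []  # no spread to measure against
    return [v for v in values if 0.6745 * abs(v - med) / mad > cutoff]


class Coordinator:
    """Hypothetical 'main' system that routes each task to a registered specialist."""

    def __init__(self) -> None:
        self.specialists: Dict[str, Callable] = {}

    def register(self, task: str, specialist: Callable) -> None:
        self.specialists[task] = specialist

    def run(self, task: str, *args):
        if task not in self.specialists:
            raise KeyError(f"no specialist registered for task {task!r}")
        return self.specialists[task](*args)


coordinator = Coordinator()
coordinator.register("fill_missing", suggest_synthetic_points)
coordinator.register("flag_outliers", flag_outliers)

raw = [10, 11, None, 10, 12, 100]
filled = coordinator.run("fill_missing", raw)       # [10, 11, 11, 10, 12, 100]
flagged = coordinator.run("flag_outliers", filled)  # [100]
print("filled:", filled)
print("flagged for review:", flagged)
```

The outlier specialist only reports the value 100 for review rather than deleting it. That design choice keeps outliers, which deep learning can benefit from, available to the rest of the system.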

This is a large, complex system, and there are many caveats to this approach of self-regulating AI deep learning. Among the most disquieting attributes of a self-regulating AI deep learning system is the increased possibility of the “butterfly effect,” the concept that small differences in the initial state of a dynamic system could trigger vast, widespread consequences. Edward Lorenz, an MIT professor of meteorology, is regarded as the father of chaos theory and the butterfly effect. The term comes from the title of Lorenz’s 1972 talk “Predictability: Does the Flap of a Butterfly’s Wings in Brazil Set Off a Tornado in Texas?” [1]. Applied here, even a small amount of human intervention in setting up any one of the modular component programs of a self-regulating AI system could cause massive differences in the overall system’s output.
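Lorenz’s own discovery came from a weather simulation, but the same sensitivity to initial conditions shows up in a much simpler system. The sketch below swaps in the logistic map x → r·x·(1 - x) with r = 4, a standard textbook example of chaos rather than Lorenz’s equations. Two runs that start 0.0000000001 apart stay nearly identical for the first several steps, then drift apart until they are effectively unrelated.

```python
def logistic_trajectory(x0, r=4.0, steps=60):
    """Iterate the logistic map x -> r * x * (1 - x); chaotic when r = 4."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


a = logistic_trajectory(0.2)
b = logistic_trajectory(0.2 + 1e-10)  # a "butterfly-sized" nudge to the starting point
gaps = [abs(x - y) for x, y in zip(a, b)]

# First step at which the two runs differ by more than 0.1.
first_big = next(i for i, g in enumerate(gaps) if g > 0.1)
print(f"gap at step 0: {gaps[0]:.1e}")
print(f"gap first exceeds 0.1 at step {first_big}")
```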

So we have established that although AI deep learning technology itself seems inherently antifragile, its Achilles heel, the potential iatrogenic effects of human intervention, is a source of systemic vulnerability and fragility. A self-regulating AI system is even more fragile, given its susceptibility to the Lorenz butterfly effect: any human error in the initial set-up of any of its components, whether in data or algorithms, could cause massive output errors. Even the best-designed AI algorithms with the best data sets are still prone to human fragility. AI systems will therefore be inherently fragile. The manner in which humans manage an AI system will determine its overall robustness and fault tolerance. The future success of AI will depend on the ability of man and machine to “learn” best practices together over time, evolving in symbiosis.

Copyright © 2018 Cami Rosso All rights reserved.

References

1. Dizikes, Peter. “When the Butterfly Effect Took Flight.” MIT Technology Review. February 22, 2011.